Will AI Replace Traditional Interfaces in the Next 5 Years?

My by-no-means-comprehensive introduction to Generative UI and the value it could add to a customer experience.

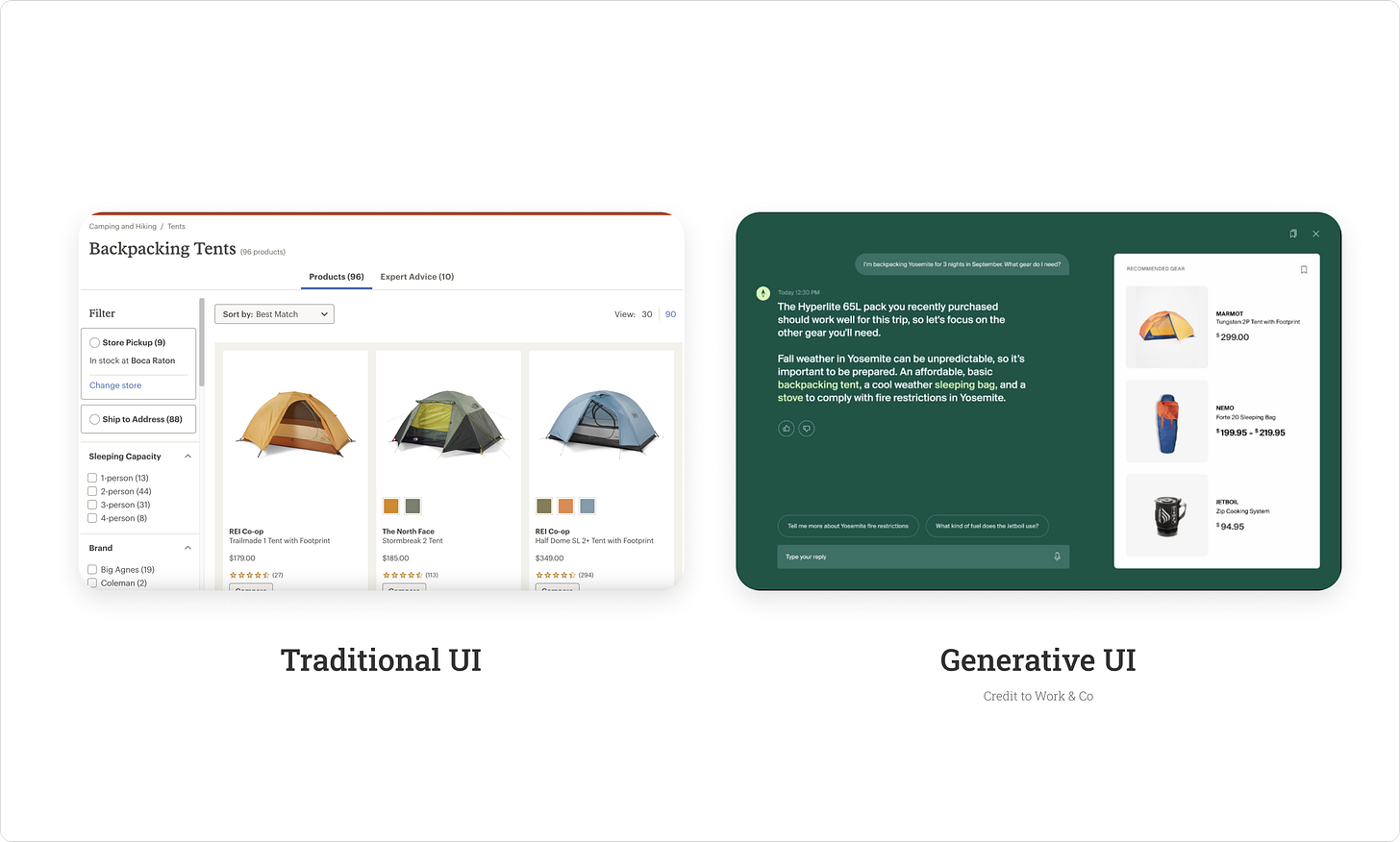

Artificial Intelligence (AI) is poised to redefine how we interact with digital experiences. Imagine planning trips, researching presidential candidates, selecting outdoor gear, or choosing the right investments, all without hours of internet research, 20 open tabs, and daunting decisions. Traditional interfaces cater to broad user groups, sometimes millions of people, but what if websites, apps, or search engines could adapt to you personally, understanding your unique needs and preferences?

Something that has been coming up a lot recently is Generative UI, a really cool concept enabled by advances in Large Language Models (LLMs). It goes beyond generating content, as we saw in early versions of ChatGPT, and even beyond generating your own website with AI. It’s about creating full interfaces tailored to each individual at any given moment. Whether you’re exploring a new online store or using a sports app, Generative UI builds an interface on the fly, aiming to give you precisely the information you need, when you need it.

I’ve seen a lot of content floating around about how “the web is finished” and “we won’t need apps anymore” because AI will just give us everything exactly how we need it. That’s a bit extreme, but… maybe?

It’s too soon to know exactly how people will interact with Generative UI experiences, or even what those experiences will become. The good news is that big players like Work & Co, Vercel, The Browser Company, Quora, Microsoft, Google, and Jakob Nielsen, among many others, are exploring the use cases of Generative UI and the impact it can have. Let’s dive into why it has so much potential to change how we design and use software.

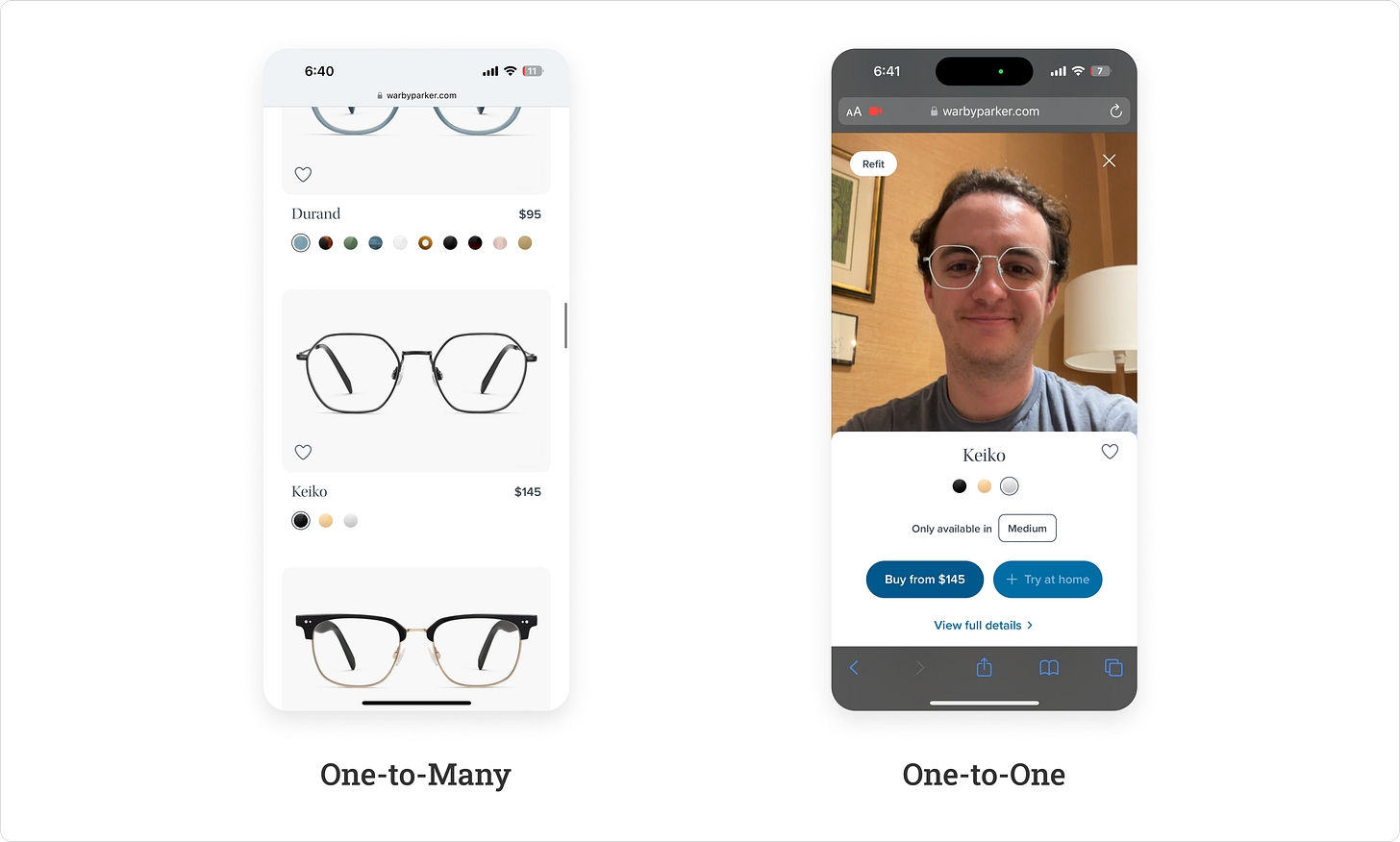

Moving from One-to-Many to One-to-One

Over the past few years, more and more digital experiences have been able to move from “one-to-many” to “one-to-one,” thanks to more advanced machine learning models. In other words, instead of one experience catering to many different people, each experience becomes specific to the one person using it.

A well-known example is Warby Parker’s AR Glasses Try-On. Before, shopping for glasses online was a “one-to-many” experience: everyone went to the same website and looked at the same glasses on a screen. Warby Parker recognized that seeing what I look like wearing glasses is the biggest factor in my buying decision. Now, when I go to their site, I can try on any of their glasses and see (pretty much) exactly how I’d look in them. Another example is previewing artwork on your own wall. The experience is unique to you, because nobody else shares your wall (unless you have roommates, of course).

Augmented and Virtual Reality have grown popular over the last few years, in part because they give the average consumer a truly personalized experience. But what about more traditional 2D interfaces, like the one you’re reading this newsletter on right now? Individualized experiences are already fairly common there, like TikTok’s “For You” page, “Recommended For You” on Netflix, or “Other Items You Might Like” when you’re looking at a shirt online. But these interfaces are still designed beforehand, and that’s the key difference: only the content slotted into them changes, and the context behind it is extremely limited, based on just a few clicks or interactions. Generative UI takes this to the next level by generating an interface on the spot, based on what you need at that moment.

Thanks to LLMs, we get a truly one-to-one experience every time we open ChatGPT, Bing Search, or Anthropic’s Claude. Natural language interfaces have become increasingly useful, if you know how to use them.

Natural Language Interfaces as a Precursor to Generative UI

Natural language interfaces like ChatGPT, where the user types anything into a text box and gets a custom response generated by an AI model, have shown just how powerful this new way of interacting can be. I can prompt it with anything, and it gives me what it determines is the most useful response. These chatbots are a huge push toward one-to-one experiences, but I don’t think they’re even close to the final destination.

When you introduce this kind of open-ended input from the user and generated response from the computer, we move from a traditional deterministic system (“I click this button and X happens, that button and Y happens”) to a probabilistic one (“I type this sentence and countless things could happen”). This isn’t necessarily a bad thing; in fact, it can be a good thing if you learn how to write really great prompts.

But, as another newsletter I follow put it really well: “There is nothing, I repeat nothing, more intimidating than an empty text box staring you in the face.” Natural language, it turns out, is not that natural. In fact, a whole new field of “prompt engineering” has emerged, made up of people who are becoming skilled at talking to these AI models. Imagine that: it takes a lot of skill and tricks to get these “smart” chatbots to do what you want.

Why is that? An AI model only has so much context when a user interacts with it. It can’t see our gestures or the way we wave our hands. It can’t really detect the tone of our voice, even though you can now talk to some AI models. It doesn’t know what we’re thinking or what preconceived notions we already have. It doesn’t know that the way I speak or write is very different from the way someone else does. It takes the words you give it and determines the most probable thing to say back. Even the slightest nuance in a prompt, like a comma or a synonym, can completely change the response.

Generative UI with Guidance, Multi-Modality, and Fluid Interfaces

The best interfaces of the future will surely leverage AI to provide a more personalized experience, but they will also become more robust, both taking in more context and guiding the user through the journey. They will be able to incorporate multiple inputs and outputs and dynamically serve content specific to an individual rather than a one-size-fits-all experience.

Incorporating multiple inputs and outputs in this way is called multi-modality, and many of the more popular models already support it. The idea is that you can provide more context with images, files, voice, itineraries, and so on, and the model will interpret all of this information. On the other side, when you prompt the model, it can respond with not just text but links, images, product recommendations, full dynamic web pages, and other complex data structures that answer your question given your intent.

Experiences built on these models can therefore become a lot more powerful and personalized. A great example is the new Arc Search app. You can search for something just as you would on Google, and it uses Generative UI to create a completely custom webpage on the fly with links, recipes, or whatever else it determines you might need to see.

Another great example is Vercel’s new AI SDK 3.0. Its demo shows a user asking for stock advice and, instead of getting just a text response, receiving generated visualizations and options that guide them through the buying experience.

One last example you can check out is Work & Co’s Vision for AI Driven Commerce. They argue that these chat interfaces promise a transformative shift toward highly personalized and efficient digital experiences: quicker access to content, smarter chatbots, user experiences dynamically tailored to individual preferences and contexts, and cost-effective experimentation for brands, all of which can strengthen competitive advantage and user engagement.

Whether the user is new or experienced, each of these examples guides them through a familiar pattern in some way. But how can we as designers achieve Generative UI? All three of the examples above look pretty different. How can we design for all of the possible outputs from our LLMs? The answer is design systems, something we already rely on to build digital interfaces today.

Design Systems as a Tool for Generative UI

What do all of the examples above have in common? They all stay generally within the style of their brand. As a user, I know where I am and what I’m interacting with, and I’m guided through the experience. With these new experiences driven by Generative UI, we start to design for intent. My buttons may look different from your buttons, and my thumbnails may have a different border. The tone of my chatbot may be different from yours.

Design systems start with the smallest, atomic elements and build components out of them. Our LLMs will use these design systems to tailor information to the user in real time while still applying the right styles, components, language, and so on.

As we move toward this more “modular” design, where interfaces are built on the fly from the building blocks we specify, design systems will become even more significant for the user experience. We’ll create a system of components that dynamically shows users the content they may want to see and generates a UI that displays information in the way that makes the most sense at that moment. We won’t just be designing for a small handful of likely user journeys anymore; we’ll be designing for many, many probable cases.
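To make the idea concrete, here is a minimal TypeScript sketch, assuming the model is asked to return a JSON list of components rather than free-form markup. The registry, the component names (`Heading`, `ProductCard`, `ActionButton`), the CSS class names, and the `renderSpec` helper are all hypothetical and not any product’s actual API. The point it illustrates is that the design system, not the model, decides what can appear on screen: anything off-system or missing required props is dropped.

```typescript
// Hypothetical design-system registry: the only building blocks the model may use.
type ComponentName = "Heading" | "ProductCard" | "ActionButton";

// Assumed shape of one item in the JSON the model is asked to return.
interface ComponentNode {
  component: string;
  props: Record<string, unknown>;
}

interface ComponentDef {
  requiredProps: string[];
  render: (props: Record<string, unknown>) => string;
}

// Escape model-provided text before it is placed into HTML.
const ESCAPES: Record<string, string> = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
const esc = (v: unknown): string => String(v).replace(/[&<>"']/g, (c) => ESCAPES[c] ?? c);

const registry: Record<ComponentName, ComponentDef> = {
  Heading: {
    requiredProps: ["text"],
    render: (p) => `<h2 class="ds-heading">${esc(p.text)}</h2>`,
  },
  ProductCard: {
    requiredProps: ["title", "price"],
    render: (p) => `<div class="ds-card"><h3>${esc(p.title)}</h3><span>${esc(p.price)}</span></div>`,
  },
  ActionButton: {
    requiredProps: ["label"],
    render: (p) => `<button class="ds-button">${esc(p.label)}</button>`,
  },
};

function isRegistered(name: string): name is ComponentName {
  return Object.prototype.hasOwnProperty.call(registry, name);
}

// Render a model-produced spec, keeping only components the design system defines.
function renderSpec(json: string): { html: string[]; rejected: string[] } {
  let nodes: unknown;
  try {
    nodes = JSON.parse(json);
  } catch {
    return { html: [], rejected: ["<invalid JSON>"] };
  }
  if (!Array.isArray(nodes)) return { html: [], rejected: ["<not an array>"] };

  const html: string[] = [];
  const rejected: string[] = [];
  for (const raw of nodes) {
    const node = raw as Partial<ComponentNode> | null;
    const name = node?.component;
    if (typeof name !== "string" || !isRegistered(name)) {
      rejected.push(String(name)); // off-system component: drop it
      continue;
    }
    const def = registry[name];
    const props = node?.props;
    if (typeof props !== "object" || props === null || !def.requiredProps.every((k) => k in props)) {
      rejected.push(name); // missing required props: drop it
      continue;
    }
    html.push(def.render(props));
  }
  return { html, rejected };
}

// Example: the model proposes three components; one is not in the design system.
const modelOutput = JSON.stringify([
  { component: "Heading", props: { text: "Rain jackets for Barcelona in March" } },
  { component: "ProductCard", props: { title: "Example Shell Jacket", price: "$180" } },
  { component: "FlashingBanner", props: { text: "BUY NOW" } },
]);
const result = renderSpec(modelOutput);
console.log(result.html.length); // 2
console.log(result.rejected); // [ 'FlashingBanner' ]
```

Constraining output to a registry trades some flexibility for consistency: the model chooses what to show, while the design system keeps every generated screen recognizably on-brand.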

How We Can Approach Generative UI Over Time

Provide it as an option. Work & Co gives a good example of this. Rather than throwing it in the user’s face and expecting them to engage with it, prompt them with something like “We see you’re going to Barcelona! We can help you out.” Let the user find their way to it.

Show users the value of this new type of interface over traditional ones by helping them find the right information faster. LLMs still can’t provide perfect information every time they’re prompted, so a key to these experiences will be giving users the right amount of guidance, even within natural language interfaces, to get them as close as possible to their goal.

Start with a hand-holding experience, and as users become more comfortable, remove some of the training wheels (a technique known as progressive reduction). It’s key to get people familiar with this new interaction model, where almost anything could happen and not even we can guarantee that the response they get is the right one.
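Progressive reduction can be sketched as a function that maps how experienced a user is to how much guidance appears around the open-ended input. This is a minimal TypeScript sketch under stated assumptions: the `UserHistory` signals, the `guidanceFor` helper, the three guidance levels, and every threshold are hypothetical placeholders that a team would tune with real usage data.

```typescript
// Hypothetical signals a product might track per user.
interface UserHistory {
  completedTasks: number; // tasks finished successfully with the generative experience
  recentRetries: number; // recent times the user rephrased or abandoned a prompt
}

type GuidanceLevel = "full" | "partial" | "minimal";

// What the interface shows around the text box at each level.
interface Guidance {
  level: GuidanceLevel;
  showSuggestedPrompts: boolean; // clickable starter prompts
  showExamples: boolean; // worked examples of good requests
  showInlineTips: boolean; // tips next to the text box
}

// Thresholds are illustrative assumptions, not recommendations.
function guidanceFor(h: UserHistory): Guidance {
  // New or struggling users get the training wheels (back), regardless of experience.
  if (h.completedTasks < 3 || h.recentRetries >= 3) {
    return { level: "full", showSuggestedPrompts: true, showExamples: true, showInlineTips: true };
  }
  // Growing familiarity: drop the worked examples first.
  if (h.completedTasks < 10) {
    return { level: "partial", showSuggestedPrompts: true, showExamples: false, showInlineTips: true };
  }
  // Comfortable users see a clean interface.
  return { level: "minimal", showSuggestedPrompts: false, showExamples: false, showInlineTips: false };
}

console.log(guidanceFor({ completedTasks: 1, recentRetries: 0 }).level); // "full"
console.log(guidanceFor({ completedTasks: 5, recentRetries: 0 }).level); // "partial"
console.log(guidanceFor({ completedTasks: 25, recentRetries: 4 }).level); // "full"
```

Letting recent retries override experience reflects the point above: since no one can guarantee the response is right, guidance should return when a user is clearly struggling.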

Understanding where it makes sense to open up to a probabilistic model, and where it doesn’t, will help determine the best way for your team to use it in your tool or website. Large retailers and e-commerce sites are a great opportunity: surfacing the best products for a given user out of thousands of SKUs could lead to faster decisions and higher conversion. On the other hand, Generative UI may not be appropriate right now in healthcare or legal fields, which require precise and standardized information and where the unpredictability of LLMs could cause major issues.

Navigating the Future with Generative UI

So, will AI replace traditional interfaces over the next 5 years? Generative UI presents an amazing opportunity for companies to continuously improve their buying experience at a very small marginal design cost.

Looking ahead, the real question isn’t whether AI will replace traditional interfaces, but how Generative UI will complement and enhance our digital experiences. It promises scalability, personalization, and efficiency that could really improve user engagement. As the major players mentioned earlier continue to develop design principles and patterns for Generative UI, I think it will definitely gain traction.

At the end of the day, Generative UI will enable us to consolidate large amounts of information and help users get the answers they need more quickly, saving them time and hassle and boosting their confidence.

What do you think about the prospective value of Generative UI? How could you see your product or site utilizing it? I’d love to hear your feedback on this newsletter and discuss Generative UI further. There’s always so much more to learn, and I’m always eager to meet new people in this area! Please feel free to leave a comment or connect with me on LinkedIn or Twitter!

Thank you,

Jake

Sources:

Accessibility Has Failed: Try Generative UI = Individualized UX
